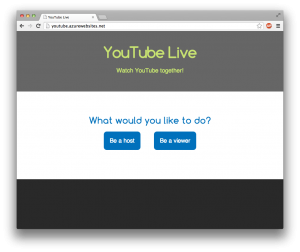

For my two-day solo Minimum Viable Product Project, I decided to make a website for sharing synchronized YouTube videos. This website would be good for people who want to watch a YouTube video together, at the same time, but aren’t in the same room. (Or maybe they are in the same room, but want to watch on separate devices.)

The user who enters a URL (the host) can play, pause, and seek the video. Everyone else watching (the viewers) will have their embedded players mirror the host’s player exactly. Pretty simple.

Scoping

My original idea had more features, but I quickly learned that I had to cut down the scope, and then cut it down again, for it to be doable in two days. I was going to have user sessions/authentication, rooms, and a chat box. I was also going to use Angular. But all of those went away.

Requirements

The tools that I ended up using for this project are:

- Node.js for the server

- Firebase for the database

- jQuery for getting the URL from the input box

- YouTube Player IFrame API

Player API Functions

After embedding the YouTube player on my website, I needed access to some of the functionality. With the API, I was able to use these functions:

First, I use loadVideoById() to load a YouTube video when a URL is submitted.
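Since loadVideoById() takes a video ID rather than a full URL, the submitted URL has to be reduced to its ID first. Here is a minimal sketch of that step, not the exact code from the project: the helper name `extractVideoId` and the `#url-input` selector are made up for illustration, and only the two most common URL shapes are handled.

```javascript
// Hypothetical helper: pull the video ID out of a YouTube URL.
// Handles https://www.youtube.com/watch?v=ID and https://youtu.be/ID only.
function extractVideoId(input) {
  let url;
  try {
    url = new URL(input.trim());
  } catch (err) {
    return null; // not a parseable URL
  }
  const host = url.hostname.replace(/^www\./, '');
  let id = null;
  if (host === 'youtu.be') {
    id = url.pathname.slice(1);
  } else if (host === 'youtube.com' || host === 'm.youtube.com') {
    id = url.searchParams.get('v');
  }
  // In practice video IDs are 11 characters from [A-Za-z0-9_-].
  return id && /^[\w-]{11}$/.test(id) ? id : null;
}

// In the browser, with `player` being the YT.Player instance
// ('#url-input' is an assumed id for the input box):
// const id = extractVideoId($('#url-input').val());
// if (id) player.loadVideoById(id);
```

Returning null for anything unrecognized keeps bad input away from the player.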

Then, when the host’s player starts playing, the viewers invoke playVideo(); in every other state they invoke pauseVideo(). I don’t use stopVideo() because that also stops the player from buffering.
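The play/pause rule is small enough to sketch in a few lines. This is an illustration under assumptions, not the project's actual code: the function name `mirrorHostState` is hypothetical, and the numeric constants are the values the IFrame API documents for YT.PlayerState.

```javascript
// Numeric values documented for YT.PlayerState in the IFrame API.
const PlayerState = {
  UNSTARTED: -1,
  ENDED: 0,
  PLAYING: 1,
  PAUSED: 2,
  BUFFERING: 3,
  CUED: 5,
};

// Hypothetical viewer-side handler: mirror the host's state onto `player`.
// `player` only needs playVideo() and pauseVideo(), which YT.Player provides.
function mirrorHostState(player, hostState) {
  if (hostState === PlayerState.PLAYING) {
    player.playVideo();
  } else {
    // Paused, buffering, ended, and so on all pause the viewer.
    player.pauseVideo();
  }
}
```

On the host side, the state would come from the player's onStateChange event, whose `event.data` carries one of these values.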

When the host is in the paused state and moves the video to a new time position, the host’s side invokes getCurrentTime() to read it. The viewers then invoke seekTo() to jump to the new position.
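A rough sketch of both halves of the seek logic follows. The names `reportSeek`, `followSeek`, and `publish` are hypothetical, and the 0.5 second tolerance is an arbitrary choice for the example rather than something taken from the project.

```javascript
const PAUSED = 2; // value of YT.PlayerState.PAUSED

// Hypothetical host-side step: while paused, report the position if it moved.
// `publish` stands in for whatever writes the time to the database.
// Returns the time that should be remembered for the next comparison.
function reportSeek(hostPlayer, hostState, lastTime, publish) {
  if (hostState !== PAUSED) return lastTime;
  const now = hostPlayer.getCurrentTime(); // elapsed seconds
  if (Math.abs(now - lastTime) > 0.5) publish(now); // tolerance is arbitrary
  return now;
}

// Viewer-side step: jump to the host's position.
function followSeek(viewerPlayer, seconds) {
  // true lets the player request data outside the currently buffered range.
  viewerPlayer.seekTo(seconds, true);
}
```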

Database

I had spent about 15 minutes playing with Firebase during an earlier project, so I knew how easy it is to set and get data. I love how Firebase automatically notifies the website when a value changes in the database.

In my Firebase database, I store 3 things:

- The state of the host’s video player (playing, paused, buffering, etc.)

- The YouTube video ID that the host is watching

- The time position of the host’s video player

Whenever the host played or paused a video, Firebase sent a trigger that invoked a callback function to play or pause all of the viewers’ video players. It was pretty simple.

If the host seeked to a new position, the new time was written to Firebase, which again triggered a callback on the viewers’ video players and moved them to the new time.

The same thing happens when the host enters a new YouTube video ID.
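The whole flow boils down to "set a value, and every listener gets called". To show the shape without pinning down a Firebase SDK version, here is a sketch that uses a tiny in-memory stand-in. It is explicitly not the Firebase API, and the key names `videoId`, `state`, and `time` are assumptions made for the example.

```javascript
// Tiny in-memory stand-in for the database. NOT the Firebase API; it only
// imitates the "set a value, every listener gets called" behaviour.
function createStore() {
  const values = {};
  const listeners = {};
  return {
    set(key, value) {
      values[key] = value;
      (listeners[key] || []).forEach((cb) => cb(value));
    },
    on(key, cb) {
      (listeners[key] = listeners[key] || []).push(cb);
      if (key in values) cb(values[key]); // deliver the current value right away
    },
  };
}

// Hypothetical wiring of one viewer's player to the three stored values.
function attachViewer(store, player) {
  store.on('videoId', (id) => player.loadVideoById(id));
  store.on('state', (s) => (s === 1 ? player.playVideo() : player.pauseVideo()));
  store.on('time', (t) => player.seekTo(t, true));
}

// Host side, for example: store.set('state', 1) once the host starts playing.
```

Delivering the current value when a listener attaches means a viewer who joins late still picks up the video, state, and position.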

Extra Feature

I also had some extra time, so I was able to add a search feature. The host can input search terms, and the first video result of the query will be loaded.

This is done by using the loadPlaylist() function and passing this object for the argument:

    {
      listType: 'search',
      list: 'the keywords to search'
    }

Using this function will actually load a playlist of the first 20 videos found in the search, which I don’t want. So when the first video is playing, I invoke getVideoUrl() to get the video’s URL, then I save the video ID to Firebase, and then play the video by ID to clear the playlist.
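The search workaround can be sketched as two small steps. The function names and the `saveVideoId` callback are hypothetical, and the sketch assumes the URL returned by getVideoUrl() carries the ID in its `v` query parameter. (As far as I know, YouTube has since retired the search list type, so this reflects the API as it behaved at the time.)

```javascript
// Hypothetical step 1: queue up the search results as a playlist.
// `player` is expected to behave like a YT.Player instance.
function startSearch(player, keywords) {
  player.loadPlaylist({ listType: 'search', list: keywords });
}

// Hypothetical step 2: call this once the first result starts playing.
// `saveVideoId` stands in for the database write.
function collapseToFirstVideo(player, saveVideoId) {
  const url = player.getVideoUrl();
  // Assumption: the ID is in the `v` query parameter of that URL.
  const id = new URL(url).searchParams.get('v');
  if (!id) return null;
  saveVideoId(id);
  player.loadVideoById(id); // loading by ID replaces the search playlist
  return id;
}
```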

Stumbling Block

An obstacle that I ran into about an hour into the project was that I had chosen to use the YouTube Player JavaScript API. That doesn’t seem like a problem at all, right? Well, it wouldn’t be if the JavaScript API were able to embed the HTML5 video player. However, it only embeds the Flash video player, which iPhones and Android devices don’t support! The solution turned out to be simple: I switched to the YouTube Player IFrame API.
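For reference, creating a player with the IFrame API looks roughly like the sketch below. The element id `player`, the dimensions, and the wrapper function `createPlayer` are assumptions for illustration; `YT` is passed in as a parameter only so the function can be exercised outside a browser.

```javascript
// Build the player once the IFrame API script has loaded. `YT` is the global
// object the API defines. 'player' is an assumed id of a placeholder <div>.
function createPlayer(YT, onStateChange) {
  return new YT.Player('player', {
    height: '390',
    width: '640',
    events: { onStateChange }, // called with { data: <YT.PlayerState value> }
  });
}

// In the browser, after including
//   <script src="https://www.youtube.com/iframe_api"></script>
// the API calls this global function when it is ready:
// window.onYouTubeIframeAPIReady = () => {
//   createPlayer(window.YT, (event) => console.log(event.data));
// };
```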

Final Result

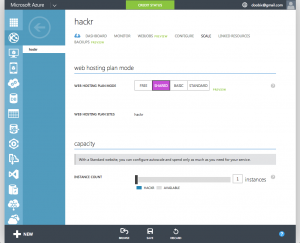

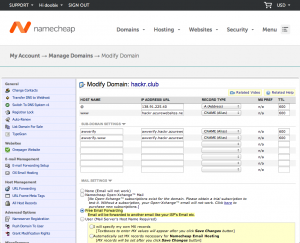

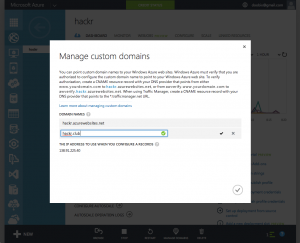

I deployed my code onto the Microsoft Azure platform, and you can view it here: http://youtube.azurewebsites.net/